Project publications will be listed here.

2024

Lygerakis, Fotios; Dave, Vedant; Rueckert, Elmar. M2CURL: Sample-Efficient Multimodal Reinforcement Learning via Self-Supervised Representation Learning for Robotic Manipulation. Proceedings Article. In: IEEE International Conference on Ubiquitous Robots (UR 2024), IEEE, 2024. Tags: Reinforcement Learning, Tactile Sensing, University of Leoben.

Dave*, Vedant; Lygerakis*, Fotios; Rueckert, Elmar. Multimodal Visual-Tactile Representation Learning through Self-Supervised Contrastive Pre-Training. Proceedings Article. In: IEEE International Conference on Robotics and Automation (ICRA 2024), 2024 (* equal contribution). Tags: Deep Learning, Tactile Sensing, University of Leoben.

2022

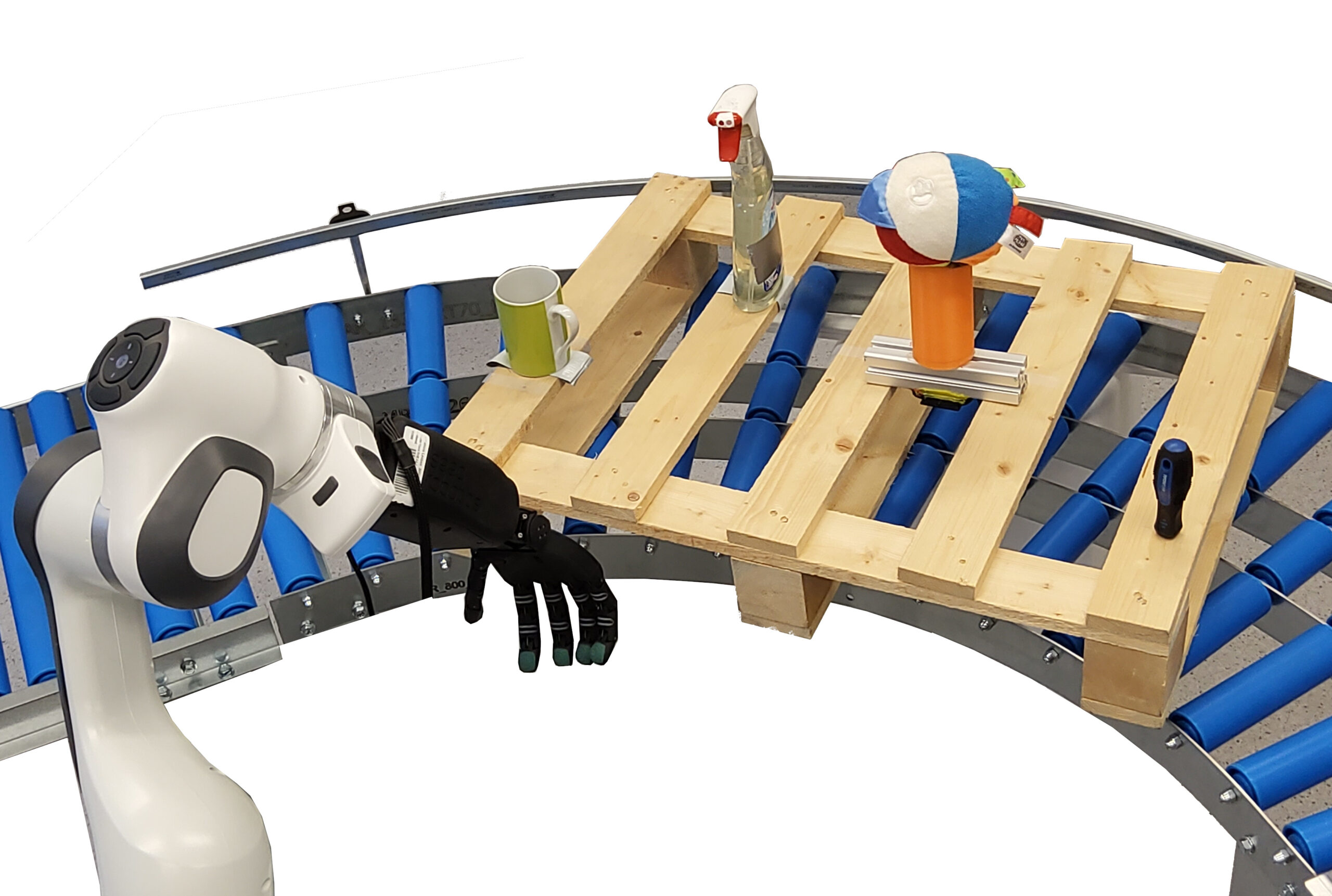

Dave, Vedant; Rueckert, Elmar. Can we infer the full-arm manipulation skills from tactile targets? Workshop, 2022 (Workshop at the International Conference on Humanoid Robots (Humanoids 2022)). Tags: Grasping, Manipulation, Movement Primitives, Tactile Sensing, University of Leoben.

Abstract: Tactile sensing provides significant information about the state of the environment for performing manipulation tasks. Manipulation skills depend on the desired initial contact points between the object and the end-effector. Based on the physical properties of the object, this contact results in distinct tactile responses. We propose Tactile Probabilistic Movement Primitives (TacProMPs) to learn a highly non-linear relationship between the desired tactile responses and the full-arm movement, where we condition solely on the tactile responses to infer the complex manipulation skills. We use a Gaussian mixture model of primitives to address the multimodality in demonstrations. We demonstrate the performance of our method in challenging real-world scenarios.

Dave, Vedant; Rueckert, Elmar. Predicting full-arm grasping motions from anticipated tactile responses. Proceedings Article. In: International Conference on Humanoid Robots (Humanoids 2022), 2022. Tags: Grasping, Manipulation, Movement Primitives, Tactile Sensing.

Abstract: Tactile sensing provides significant information about the state of the environment for performing manipulation tasks. Depending on the physical properties of the object, manipulation tasks can exhibit large variation in their movements. For a grasping task, the movement of the arm and of the end effector varies depending on the points of contact on the object, especially if the object is non-homogeneous in hardness and/or has an uneven geometry. In this paper, we propose Tactile Probabilistic Movement Primitives (TacProMPs) to learn a highly non-linear relationship between the desired tactile responses and the full-arm movement. We condition solely on the tactile responses to infer the complex manipulation skills. We formulate a joint trajectory of full-arm joints with tactile data, leverage the model to condition on the desired tactile response from the non-homogeneous object, and infer the full-arm (7-DoF Panda arm and 19-DoF gripper hand) motion. We use a Gaussian mixture model of primitives to address the multimodality in demonstrations. We also show that measurement noise adjustment must be taken into account because multiple systems work in collaboration. We validate and show the robustness of the approach through two experiments. First, we consider an object with non-uniform hardness. Grasping from different locations requires different motions and results in different tactile responses. Second, we consider an object with homogeneous hardness, which we grasp with widely varying grasping configurations. Our results show that TacProMPs can successfully model complex multimodal skills and generalise to new situations.