2024

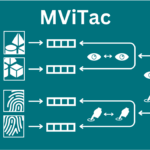

Lygerakis, Fotios; Dave, Vedant; Rueckert, Elmar. M2CURL: Sample-Efficient Multimodal Reinforcement Learning via Self-Supervised Representation Learning for Robotic Manipulation. Proceedings Article. In: IEEE International Conference on Ubiquitous Robots (UR 2024), IEEE, 2024.

@inproceedings{Lygerakis2024,

title = {M2CURL: Sample-Efficient Multimodal Reinforcement Learning via Self-Supervised Representation Learning for Robotic Manipulation},

author = {Fotios Lygerakis and Vedant Dave and Elmar Rueckert},

url = {https://cloud.cps.unileoben.ac.at/index.php/s/NPejb2Fp4Y8LeyZ},

year = {2024},

date = {2024-04-04},

booktitle = {IEEE International Conference on Ubiquitous Robots (UR 2024)},

organization = {IEEE},

keywords = {Reinforcement Learning, Tactile Sensing, University of Leoben},

pubstate = {published},

tppubtype = {inproceedings}

}

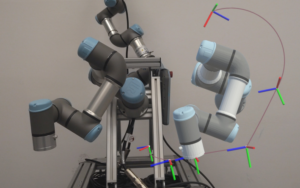

Feith, Nikolaus; Rueckert, Elmar. Advancing Interactive Robot Learning: A User Interface Leveraging Mixed Reality and Dual Quaternions. Proceedings Article. In: IEEE International Conference on Ubiquitous Robots (UR 2024), 2024.

@inproceedings{Feith2024B,

title = {Advancing Interactive Robot Learning: A User Interface Leveraging Mixed Reality and Dual Quaternions},

author = {Nikolaus Feith and Elmar Rueckert},

url = {https://cloud.cps.unileoben.ac.at/index.php/s/qCgzbmCJYSH3F97},

year = {2024},

date = {2024-04-04},

urldate = {2024-04-04},

booktitle = {IEEE International Conference on Ubiquitous Robots (UR 2024)},

keywords = {Human-Robot-Interaction, University of Leoben},

pubstate = {published},

tppubtype = {inproceedings}

}

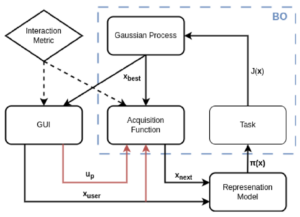

Feith, Nikolaus; Rueckert, Elmar. Integrating Human Expertise in Continuous Spaces: A Novel Interactive Bayesian Optimization Framework with Preference Expected Improvement. Proceedings Article. In: IEEE International Conference on Ubiquitous Robots (UR 2024), IEEE, 2024.

@inproceedings{Feith2024A,

title = {Integrating Human Expertise in Continuous Spaces: A Novel Interactive Bayesian Optimization Framework with Preference Expected Improvement},

author = {Nikolaus Feith and Elmar Rueckert},

url = {https://cloud.cps.unileoben.ac.at/index.php/s/6rTWAkoXa3zsJxf},

year = {2024},

date = {2024-04-04},

urldate = {2024-04-04},

booktitle = {IEEE International Conference on Ubiquitous Robots (UR 2024)},

organization = {IEEE},

keywords = {Human-Robot-Interaction, University of Leoben},

pubstate = {published},

tppubtype = {inproceedings}

}

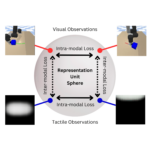

Dave*, Vedant; Lygerakis*, Fotios; Rueckert, Elmar. Multimodal Visual-Tactile Representation Learning through Self-Supervised Contrastive Pre-Training. Proceedings Article. In: IEEE International Conference on Robotics and Automation (ICRA 2024), 2024 (* equal contribution).

@inproceedings{Dave2024b,

title = {Multimodal Visual-Tactile Representation Learning through Self-Supervised Contrastive Pre-Training},

author = {Vedant Dave* and Fotios Lygerakis* and Elmar Rueckert},

url = {https://cloud.cps.unileoben.ac.at/index.php/s/Nw9TprfdDoLgr8e},

year = {2024},

date = {2024-01-28},

urldate = {2024-01-28},

booktitle = {IEEE International Conference on Robotics and Automation (ICRA 2024)},

note = {* equal contribution},

keywords = {deep learning, Tactile Sensing, University of Leoben},

pubstate = {published},

tppubtype = {inproceedings}

}

2023

Lygerakis, Fotios; Rueckert, Elmar. CR-VAE: Contrastive Regularization on Variational Autoencoders for Preventing Posterior Collapse. Proceedings Article. In: Asian Conference of Artificial Intelligence Technology (ACAIT), 2023.

@inproceedings{Lygerakis2023,

title = {CR-VAE: Contrastive Regularization on Variational Autoencoders for Preventing Posterior Collapse},

author = {Fotios Lygerakis and Elmar Rueckert},

url = {https://cloud.cps.unileoben.ac.at/index.php/s/fNNRnzJFPrtGQM2},

year = {2023},

date = {2023-08-16},

urldate = {2023-08-16},

booktitle = {Asian Conference of Artificial Intelligence Technology (ACAIT)},

keywords = {computer vision, deep learning, University of Leoben},

pubstate = {published},

tppubtype = {inproceedings}

}

2022

Dave, Vedant; Rueckert, Elmar. Can we infer the full-arm manipulation skills from tactile targets? Workshop, 2022 (Workshop in the International Conference on Humanoid Robots (Humanoids 2022)).

@workshop{Dave2022WS,

title = {Can we infer the full-arm manipulation skills from tactile targets?},

author = {Vedant Dave and Elmar Rueckert},

url = {https://cloud.cps.unileoben.ac.at/index.php/f/562953},

year = {2022},

date = {2022-11-28},

urldate = {2022-11-28},

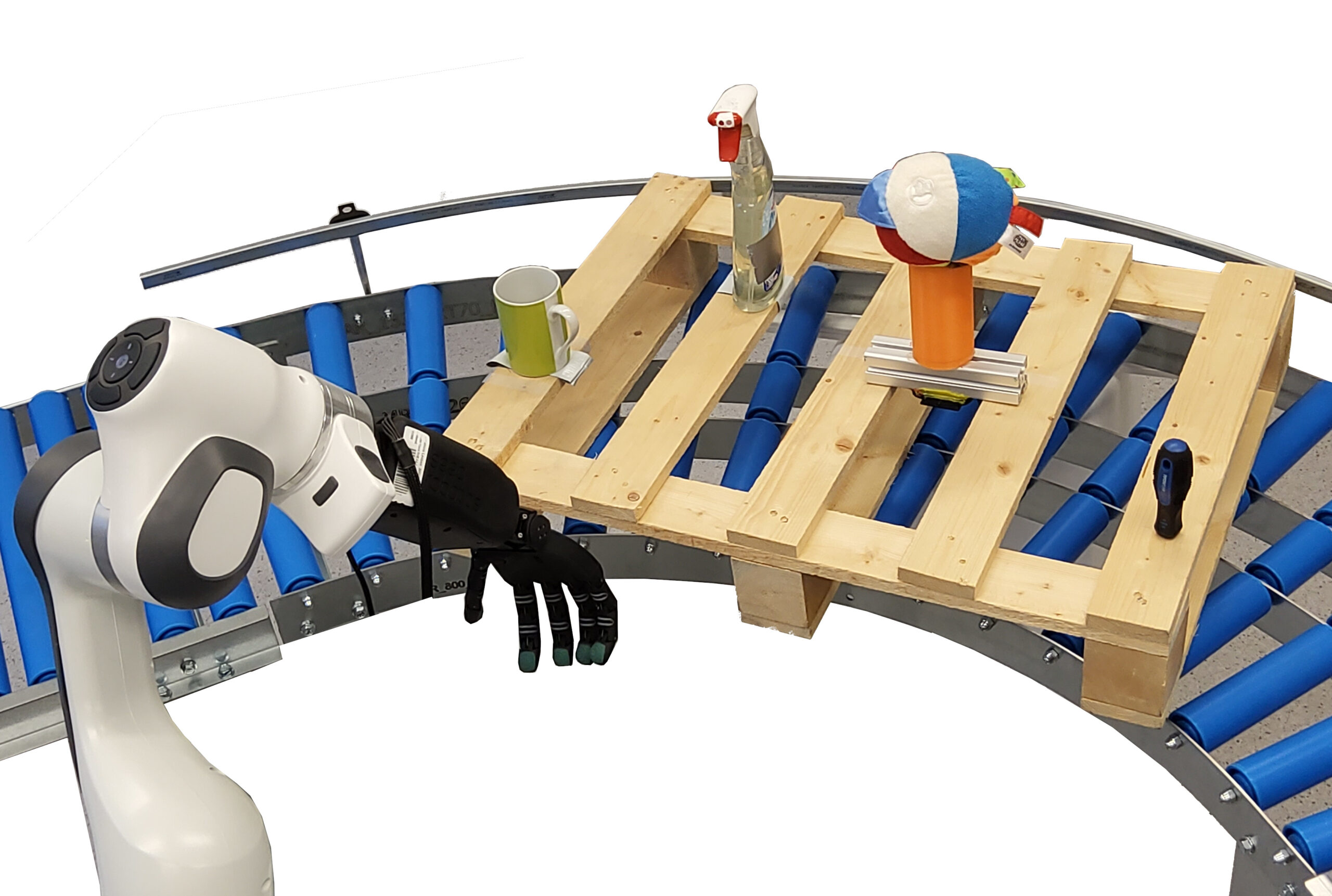

abstract = {Tactile sensing provides significant information about the state of the environment for performing manipulation tasks. Manipulation skills depend on the desired initial contact points between the object and the end-effector. Based on the physical properties of the object, this contact results in distinct tactile responses. We propose Tactile Probabilistic Movement Primitives (TacProMPs) to learn a highly non-linear relationship between the desired tactile responses and the full-arm movement, where we condition solely on the tactile responses to infer the complex manipulation skills. We use a Gaussian mixture model of primitives to address the multimodality in demonstrations. We demonstrate the performance of our method in challenging real-world scenarios.},

note = {Workshop in the International Conference on Humanoid Robots (Humanoids 2022)},

keywords = {Grasping, Manipulation, Movement Primitives, Tactile Sensing, University of Leoben},

pubstate = {published},

tppubtype = {workshop}

}
